In software development, user acceptance testing is a crucial aspect that ensures the final product is of high quality and meets the end-users’ expectations.

User Acceptance Testing (UAT), sometimes called beta testing or end-user testing, is performed on most IT projects. It is a phase of software development in which the software is tested in the “real world” by the intended audience or a business representative. This type of testing is not intended to be menu-driven. Instead, business users perform it to verify that the application will meet the needs of the end-user, using scenarios and data representative of actual usage in the field.

UAT checks whether the software meets its specific use-case requirements from a user’s perspective. Done well, it helps teams identify issues and bugs before the software is released to end-users. However, UAT is not easy, and teams sometimes make mistakes that lead to poor results. In this blog post, we’ll highlight ten of the most common User Acceptance Testing mistakes and how to avoid them.

01. Insufficient planning for User Acceptance Testing

A common UAT issue is failing to plan test cases and coordinate them effectively. Teams need to create a comprehensive test plan that lists all the features they will test and sets a timeline for completing them. Proper coordination and communication among testing teams, development teams and stakeholders make effective UAT much easier.

02. Lack of clear and measurable UAT acceptance criteria

Without clear and measurable UAT acceptance criteria, the testing process becomes arbitrary. The most effective acceptance criteria are specific, measurable, and achievable. They should provide an unambiguous definition of what constitutes a pass or a fail for each test case.
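
To make "measurable" concrete, here is a minimal Python sketch of an acceptance criterion expressed as a threshold with an explicit pass/fail rule. The `AcceptanceCriterion` class, the metric names, and the thresholds are all hypothetical and only illustrate the idea; your own criteria will depend on your product and stakeholders.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AcceptanceCriterion:
    """One measurable pass/fail rule for a UAT test case (hypothetical structure)."""

    name: str
    metric: str
    threshold: float
    higher_is_better: bool = False

    def evaluate(self, observed: float) -> bool:
        """Return True when the observed value satisfies the threshold."""
        if self.higher_is_better:
            return observed >= self.threshold
        return observed <= self.threshold


# Illustrative criteria; the metrics and thresholds are assumptions.
checkout_time = AcceptanceCriterion(
    name="Checkout completes quickly",
    metric="seconds_to_complete_checkout",
    threshold=120.0,
)
task_success = AcceptanceCriterion(
    name="Most testers finish the task unaided",
    metric="task_success_rate",
    threshold=0.9,
    higher_is_better=True,
)

print(checkout_time.evaluate(95.0))  # 95 seconds is within the 120-second limit
print(task_success.evaluate(0.8))    # 80% is below the 90% target
```

Writing each criterion as a number plus a direction leaves no room for debate about whether a test case passed.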

03. Choosing the wrong testers for UAT

UAT should involve real end-users to ensure the software satisfies their specific needs. Inadequate user representation and involvement can lead to a testing process that doesn’t reflect end-users’ requirements, risking the failure of the software.

A related mistake is failing to prioritize critical business processes and user journeys. Knowing which areas matter most ensures that you test the most important functionality and workflows first.
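
One simple way to make prioritization explicit is to score each user journey and test the highest scores first. The sketch below assumes a hypothetical scoring rule (business impact multiplied by usage frequency, each on a 1 to 5 scale) and made-up journeys; treat it as a starting point rather than a standard formula.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class UserJourney:
    """A workflow to cover in UAT, with illustrative 1-5 ratings."""

    name: str
    business_impact: int  # 1 (low) to 5 (high), agreed with stakeholders
    usage_frequency: int  # 1 (rare) to 5 (constant)

    @property
    def priority(self) -> int:
        # Hypothetical rule: impact multiplied by frequency.
        return self.business_impact * self.usage_frequency


def order_by_priority(journeys):
    """Return journeys sorted from highest to lowest priority score."""
    return sorted(journeys, key=lambda journey: journey.priority, reverse=True)


journeys = [
    UserJourney("Update profile picture", business_impact=1, usage_frequency=2),
    UserJourney("Place an order", business_impact=5, usage_frequency=5),
    UserJourney("Reset password", business_impact=4, usage_frequency=2),
]

for journey in order_by_priority(journeys):
    print(journey.priority, journey.name)
```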

04. Neglecting to run UAT across various devices, browsers and operating systems

Testing on different devices, browsers, and operating systems helps ensure compatibility and the software’s functionality in different environments. Leaving out this critical testing step can lead to a software release with glitches that affect end-users’ experience.
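
A coverage matrix helps make sure no combination is forgotten. The following sketch builds one from lists of devices, operating systems and browsers; the specific entries are assumptions chosen for illustration, and the only filter applied is that Safari is offered on Apple platforms only.

```python
from itertools import product

# Illustrative targets; replace with the platforms your users actually have.
DEVICES = {
    "desktop": ["Windows", "macOS"],
    "phone": ["Android", "iOS"],
}
BROWSERS = ["Chrome", "Firefox", "Safari"]


def is_valid(os_name: str, browser: str) -> bool:
    """Skip combinations that do not exist, such as Safari outside Apple platforms."""
    return browser != "Safari" or os_name in {"macOS", "iOS"}


def build_matrix():
    """Return every (device, operating system, browser) combination to test."""
    combinations = []
    for device, systems in DEVICES.items():
        for os_name, browser in product(systems, BROWSERS):
            if is_valid(os_name, browser):
                combinations.append((device, os_name, browser))
    return combinations


for row in build_matrix():
    print(row)
```

Each row can then be assigned to a tester, so gaps in coverage are visible before the release rather than after it.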

05. Ignoring edge cases when performing User Acceptance Testing

Another common mistake during user acceptance testing is focusing only on standard workflows while neglecting unusual or extreme scenarios. This oversight can leave critical gaps, because the application may fail under uncommon conditions. To ensure the system performs reliably, include edge cases and stress testing in the UAT process, and verify the application’s behavior under unusual as well as typical conditions.
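
For numeric inputs, one common way to find edge cases is boundary value analysis: test just below, at, and just above each limit. The helper below is a small sketch of that idea; the function name and the example field (an order quantity allowed from 1 to 100) are assumptions for illustration.

```python
from __future__ import annotations


def boundary_values(minimum: int, maximum: int) -> list[int]:
    """Return values just outside, on, and just inside an allowed integer range."""
    if minimum > maximum:
        raise ValueError("minimum must not exceed maximum")
    candidates = [
        minimum - 1, minimum, minimum + 1,
        maximum - 1, maximum, maximum + 1,
    ]
    # A set removes duplicates when the range is very narrow.
    return sorted(set(candidates))


# Hypothetical field: order quantity must be between 1 and 100.
print(boundary_values(1, 100))
```

Values outside the range (here 0 and 101) should be rejected by the application with a clear message, while the values inside it should be accepted.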

06. Not conducting thorough usability testing during UAT

Poor usability leads to a negative user experience, and in the end users will abandon the product. Usability testing is a critical part of UAT because it confirms that end-users can use the software with ease. Skipping it can result in a product that is difficult to use and therefore attracts fewer users.

07. Overlooking security and data protection testing during UAT

Security and data protection testing are essential now that cybercrime is so widespread. Poor security can lead to a data breach that damages your business or organization. UAT should include thorough security and data protection checks to confirm the product is safe to use.

08. Ignoring performance and scalability testing during UAT

Performance and scalability testing ensure that the software performs optimally under different usage scenarios. Ignoring performance and scalability testing can lead to a product that doesn’t perform well under heavy usage, leading to a loss of users.

09. Not performing regression testing after bug fixes and other post-UAT changes

Regression testing ensures that previously fixed bugs do not return and that functionality touched by a change still works as expected. Neglecting comprehensive regression testing leaves the product susceptible to bugs and glitches that lead to a poor user experience.

10. Failing to document and communicate UAT issues and results effectively

After testing, teams need to document test results and communicate them effectively to relevant stakeholders. Failing to do this leads to ambiguity and poor decision making, resulting in a failure to meet customer needs.
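
A short, consistent summary makes results easier to share with stakeholders. The sketch below assumes a hypothetical result format (a test case name, a `pass` or `fail` status, and an optional severity) and sample data invented for illustration; it reports totals, the pass rate, and any critical failures.

```python
from collections import Counter

# Sample results; the field names and values are assumptions for illustration.
results = [
    {"case": "Place an order", "status": "pass", "severity": None},
    {"case": "Reset password", "status": "fail", "severity": "critical"},
    {"case": "Update profile picture", "status": "pass", "severity": None},
    {"case": "Export invoice", "status": "pass", "severity": None},
]


def summarize(test_results):
    """Condense individual UAT results into figures stakeholders can act on."""
    counts = Counter(result["status"] for result in test_results)
    total = len(test_results)
    critical = [
        result["case"]
        for result in test_results
        if result["status"] == "fail" and result["severity"] == "critical"
    ]
    return {
        "total": total,
        "passed": counts["pass"],
        "failed": counts["fail"],
        "pass_rate": round(counts["pass"] / total, 2) if total else 0.0,
        "critical_failures": critical,
    }


summary = summarize(results)
for key, value in summary.items():
    print(f"{key}: {value}")
```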

Conclusion

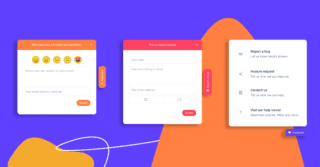

UAT is vital to ensuring that software development leads to exceptional products. However, teams face several challenges that may lead to poor testing results and inefficiencies. Through proper coordination and planning, and by choosing the right user acceptance testing (UAT) tool, testing teams can avoid common UAT issues and mistakes.

Clear communication with stakeholders, thorough testing, and documentation of test results ensure that the end-users have an exceptional user experience. By avoiding the ten common mistakes discussed in this blog post, you have a better chance of having an end product that maximizes customer satisfaction and business objectives.

10 Fixes for Bug Fixing

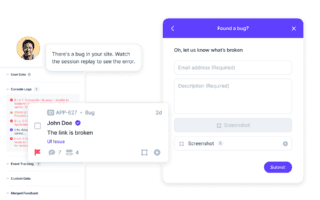

Whether you’re building something new or just need to keep an existing platform running smoothly, you need to be able to identify and rectify bugs fast.

In this guide, we’ll explore 10 reasons behind ineffective bug fixes and how to solve them, including:

- Setting up your automated bug fix feedback loop

- Where is it going wrong?

- Seeing their bugs with your own eyes (and ears)

- Take the guesswork out of what to do first